Deep Dive into Long-Term Data Backup with AWS S3

Dhaval Nagar / CEO

At a time when data is not just an asset but the lifeblood of business strategy and operations, managing and securing long-term backups is a critical concern. This guide walks through a practical approach to long-term data backup, using a specific use case: 30 TB of data intended for infrequent access.

Our focus will be on Amazon Web Services (AWS), particularly leveraging Amazon S3 Glacier for cost-effective, long-term storage. This guide will serve as an insightful resource for both beginners and seasoned IT professionals.

Understanding the Use Case

A customer needed to back up approximately 30 TB of data for the long term. The requirement presented several challenges and opportunities:

- Infrequent Access: The data is accessed 2-3 times a year, indicating a need for a storage solution that is cost-effective for infrequent access yet reliable and secure.

- Accessible Pre-Backup Window: There is a 7-day window where files are accessible before being marked for backup. This window provides an opportunity for data validation or last-minute access before long-term storage.

- Moderate Retrieval Time: Immediate access is not a necessity, but excessively long retrieval times (e.g., several days) are unacceptable.

- Security and Access Control: Many other long-term storage providers exist, but the customer wanted to ensure high security and privacy and therefore decided to stay on AWS infrastructure for long-term storage.

Given all these parameters, Amazon S3 Glacier emerged as the best candidate for this use case.

Grouping and Glacier: A Strategic Approach

Grouping Backup Files

The first step involves grouping backup files, which simplifies both management and retrieval. Grouping can be based on file type, project, or date, facilitating easier tracking and management. This approach reduces complexity and enhances efficiency, particularly when dealing with large datasets.

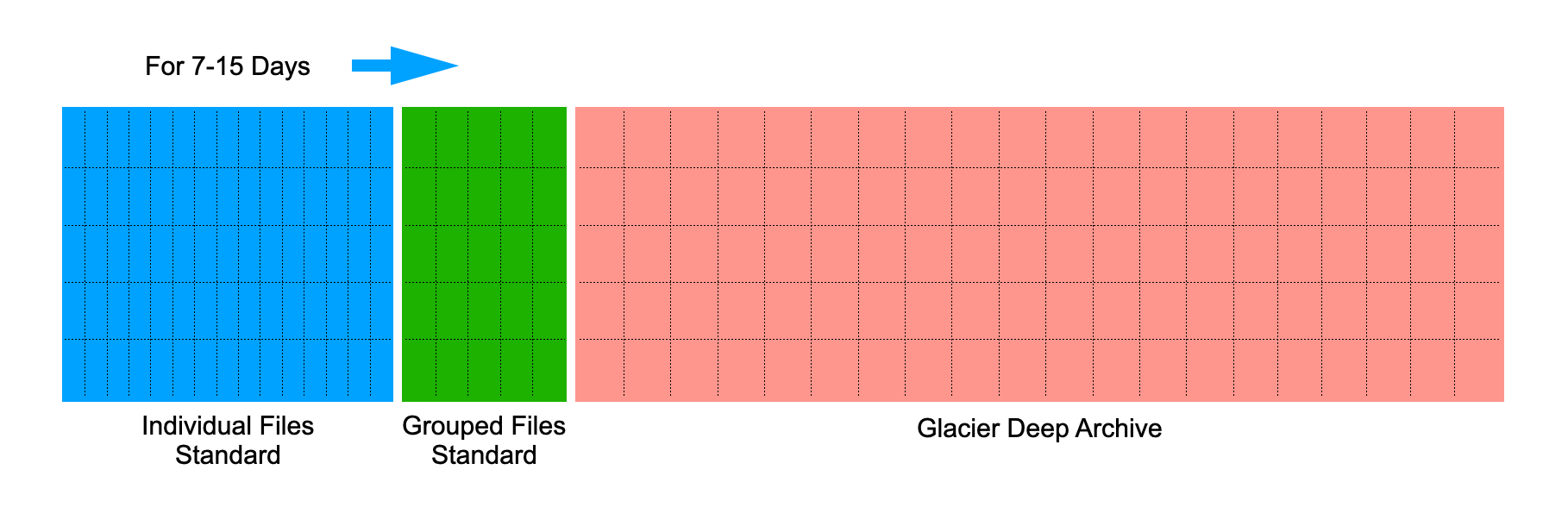

Given the 7-day window before files are marked for backup, we implemented a staging area using Amazon S3 Standard. During this period, data can be reviewed, ensuring that only necessary data is moved to long-term storage, thus optimizing costs.

Recommendation: In our experience, organizing files into logical groups makes later retrieval much more efficient. For example, instead of storing a large number of small files, store a small number of large zip archives, each covering a logical group such as an Application ID and a date range. This saves significantly on minimum object size charges, API calls, and future retrieval costs.

If you have a large number of small objects that must be restored collectively, consider the cost implications of the following options:

- Using a lifecycle policy to automatically transition objects individually to Amazon S3 Glacier

- Zipping objects into a single file and storing them in Amazon S3 Glacier
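The second option can be sketched in a few lines of Python. This is a minimal illustration, not the customer's actual pipeline: the `(path, app_id, day)` input layout, the monthly date range, and the function names `group_key` and `bundle_by_group` are all assumptions made for the example.

```python
import zipfile
from collections import defaultdict
from datetime import date
from pathlib import Path


def group_key(app_id: str, day: date) -> str:
    """Build a logical group name from an application ID and a calendar month."""
    return f"{app_id}/{day.year:04d}-{day.month:02d}"


def bundle_by_group(files, out_dir):
    """Zip files into one archive per logical group.

    `files` is an iterable of (path, app_id, day) tuples. This layout is
    hypothetical and only serves the illustration.
    Returns a dict mapping group name -> path of the archive created.
    """
    groups = defaultdict(list)
    for path, app_id, day in files:
        groups[group_key(app_id, day)].append(Path(path))

    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    archives = {}
    for name, members in groups.items():
        archive = out_dir / (name.replace("/", "_") + ".zip")
        with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
            for member in members:
                # Assumes file names are unique within a group.
                zf.write(member, arcname=member.name)
        archives[name] = archive
    return archives
```

Each resulting archive is then uploaded as a single object, so one restore request brings back the whole group.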

Leveraging Amazon S3 Glacier

Amazon S3 Glacier is designed for 99.999999999% (the famous 11 9s) durability, providing secure and durable storage at a fraction of the cost of on-premises solutions. For data like ours, which is rarely accessed, Glacier offers a perfect balance between cost and accessibility.

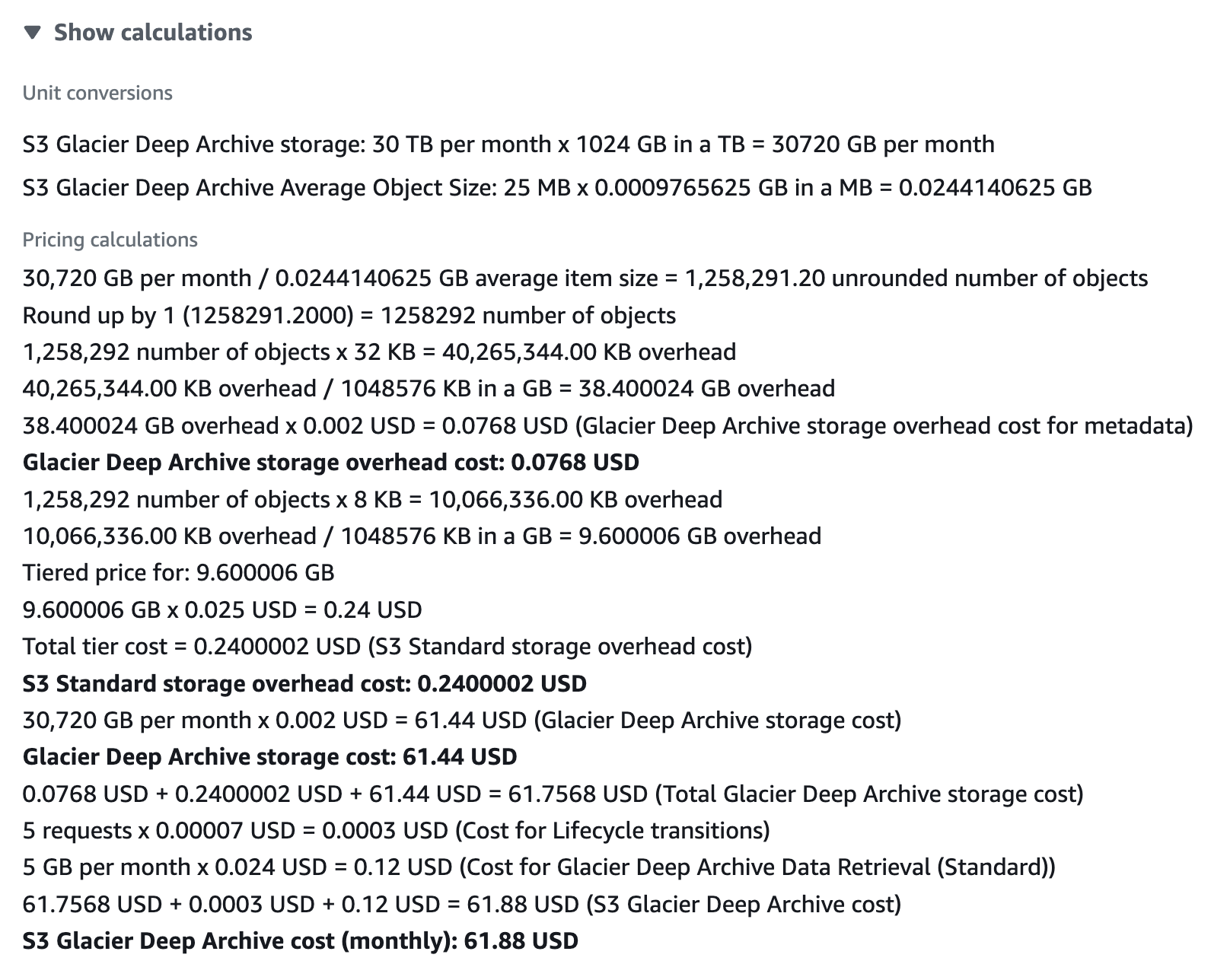

Amazon S3 Glacier has minimum capacity charges for each object depending on the storage class you use. For example, S3 Glacier Instant Retrieval has a minimum capacity charge of 128 KB for each object.

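Amazon S3 also adds 32 KB of storage for index and related metadata for each object that is archived to the S3 Glacier Flexible Retrieval or S3 Glacier Deep Archive storage classes, billed at the rate of that storage class. A further 8 KB per object, for the object name and other metadata, is billed at S3 Standard rates.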
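To see why object count matters, the per-object figures above can be turned into a rough calculation. The sketch below is illustrative only: the function names are hypothetical, and it models just the 128 KB minimum and the 32 KB index overhead mentioned in this section.

```python
KB = 1024

# Figures taken from the text above.
GLACIER_IR_MIN_BILLABLE_BYTES = 128 * KB   # minimum capacity charge per object
ARCHIVE_INDEX_OVERHEAD_BYTES = 32 * KB     # added per archived object


def billable_size_glacier_ir(size_bytes: int) -> int:
    """Billable size of one object in S3 Glacier Instant Retrieval."""
    return max(size_bytes, GLACIER_IR_MIN_BILLABLE_BYTES)


def archive_metadata_overhead(object_count: int) -> int:
    """Total index overhead (bytes) for objects archived to
    S3 Glacier Flexible Retrieval or S3 Glacier Deep Archive."""
    return object_count * ARCHIVE_INDEX_OVERHEAD_BYTES


if __name__ == "__main__":
    # Hypothetical comparison: the same data as many small files
    # versus a much smaller number of zip archives.
    for count in (10_000_000, 1_000):
        overhead_gib = archive_metadata_overhead(count) / 1024**3
        print(f"{count:>10,} objects -> {overhead_gib:.3f} GiB of index overhead")
```

The overhead scales linearly with the number of objects, which is the main reason grouping small files into larger archives pays off.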

Lifecycle Policies for Automation

Implementing Amazon S3 lifecycle policies automates the transition of data to Glacier based on age. For instance, files older than 15 days can be automatically moved to Glacier, optimizing storage costs without manual intervention.

In our case, we configured Amazon S3 lifecycle policies to automate the transition of data from S3 Standard to S3 Glacier. Given the initial 7-day accessibility requirement, we set up a policy that transitions data to Glacier after this period, optimizing for both cost and compliance with access needs. Keep in mind that the original files were individual files that were later grouped into a single bundle. The bundle can either be retained for 7 days or moved to Glacier the next day.
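A lifecycle rule of this kind can be expressed as a small configuration document. The following is a minimal sketch using boto3's `put_bucket_lifecycle_configuration`; the rule ID, prefix, and bucket name are placeholders rather than values from the actual project, and `boto3` must be installed and configured with credentials before `apply_lifecycle` is called.

```python
# Storage class identifiers accepted by S3 lifecycle transitions
# for the Glacier family.
GLACIER_CLASSES = {"GLACIER", "GLACIER_IR", "DEEP_ARCHIVE"}


def glacier_transition_rule(rule_id: str, prefix: str, days: int,
                            storage_class: str = "GLACIER") -> dict:
    """Build one lifecycle rule that moves objects under `prefix`
    to `storage_class` once they are `days` days old."""
    if storage_class not in GLACIER_CLASSES:
        raise ValueError(f"unsupported storage class: {storage_class}")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": days, "StorageClass": storage_class}],
    }


def apply_lifecycle(bucket: str, rules: list) -> None:
    """Apply the rules to a bucket. Replaces any existing lifecycle configuration."""
    import boto3  # third-party dependency, imported lazily

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": rules},
    )


# Example (placeholder names): archive staged bundles after the 7-day window.
# apply_lifecycle("example-backup-bucket",
#                 [glacier_transition_rule("archive-after-7d", "staging/", 7)])
```

Passing `days=1` instead would model the alternative of moving an already-grouped bundle to Glacier the next day.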

Best Practices and Storage Tier Considerations

Understanding Storage Tiers

- Amazon S3 Standard-IA: Ideal for data that is accessed less frequently but requires rapid access when needed.

- Amazon S3 Glacier and Glacier Deep Archive: Suited for data that is accessed infrequently. Glacier is cost-effective for long-term storage, with retrieval times ranging from minutes to hours. Glacier Deep Archive is the most economical option for archival data, with retrieval times of up to 12 hours, which was acceptable in our case.

Cost Optimization with Intelligent Tiering

S3 Intelligent-Tiering automatically moves objects between access tiers based on changing access patterns, keeping storage costs optimized without sacrificing access speed. This feature is particularly useful for data with unpredictable access patterns.

Regular Storage Review and Optimization

Periodic reviews of storage utilization and costs help in identifying opportunities for cost savings and operational improvements. AWS Cost Explorer and S3 Storage Class Analysis tools can aid in these assessments, providing insights into storage trends and potential optimizations.

Data Retrieval Options

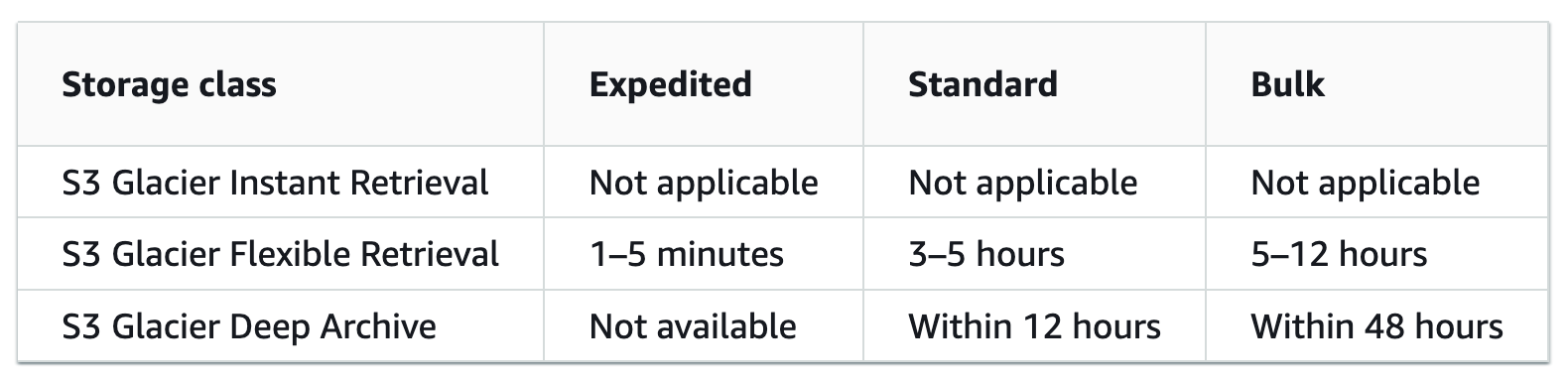

Glacier offers several retrieval options, from Expedited (available within minutes) to Standard (a few hours) and Bulk retrievals (5-12 hours), allowing businesses to balance cost against retrieval time based on their specific needs.
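For objects archived through S3, a retrieval is started with a restore request that names the tier. The sketch below uses boto3's `restore_object`; the helper names, bucket, and key are hypothetical, and the number of days the restored copy stays available is a choice for the caller.

```python
# Retrieval tiers named in the text above.
VALID_TIERS = {"Expedited", "Standard", "Bulk"}


def build_restore_request(days_available: int, tier: str = "Standard") -> dict:
    """Build the RestoreRequest payload for an archived object.

    `days_available` is how long the temporary restored copy is kept.
    """
    if tier not in VALID_TIERS:
        raise ValueError(f"unknown retrieval tier: {tier}")
    if days_available < 1:
        raise ValueError("days_available must be at least 1")
    return {
        "Days": days_available,
        "GlacierJobParameters": {"Tier": tier},
    }


def request_restore(bucket: str, key: str, days_available: int,
                    tier: str = "Standard") -> dict:
    """Start an asynchronous restore; the object becomes readable once it completes."""
    import boto3  # third-party dependency, imported lazily

    s3 = boto3.client("s3")
    return s3.restore_object(
        Bucket=bucket,
        Key=key,
        RestoreRequest=build_restore_request(days_available, tier),
    )


# Example (placeholder names): a low-cost bulk restore kept for 3 days.
# request_restore("example-backup-bucket", "app1_2024-01.zip", 3, tier="Bulk")
```

With data accessed only 2-3 times a year and no need for immediate access, the Standard or Bulk tier is usually the natural starting point for this use case.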

Security and Compliance

Ensuring data is stored securely and in compliance with industry standards is crucial. AWS provides various encryption options for data at rest in Glacier, along with comprehensive access control mechanisms. Additionally, AWS’s compliance certifications ensure that data storage meets rigorous standards.

Pricing Insights

Understanding the cost implications of using Glacier is crucial. Storage costs in Glacier are significantly lower than traditional or even cloud-based standard storage options. However, retrieval costs and the price for data transfer out of AWS should be considered. The AWS Pricing Calculator is an invaluable tool for estimating these costs based on specific use cases and access patterns.

Early Deletion Fees: S3 Glacier Flexible Retrieval archives have a minimum storage duration of 90 days. Archives deleted before 90 days incur a pro-rated charge equal to the storage charge for the remaining days.

The S3 Glacier Deep Archive storage class has a minimum storage duration of 180 days and a default retrieval time of up to 12 hours.

https://docs.aws.amazon.com/AmazonS3/latest/userguide/storage-class-intro.html
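The early deletion rule described above is simple arithmetic, sketched here under stated assumptions: the per-GB monthly price is supplied by the caller (take it from the current AWS pricing page), a 30-day month is assumed for pro-rating, and the function names are hypothetical.

```python
# Minimum storage durations stated above, keyed by S3 storage class identifier.
MIN_STORAGE_DAYS = {"GLACIER": 90, "DEEP_ARCHIVE": 180}


def early_deletion_days(storage_class: str, days_stored: int) -> int:
    """Days still billed if an object is deleted after `days_stored` days."""
    return max(0, MIN_STORAGE_DAYS[storage_class] - days_stored)


def early_deletion_charge(storage_class: str, days_stored: int, size_gb: float,
                          price_per_gb_month: float,
                          days_per_month: int = 30) -> float:
    """Pro-rated storage charge for the remaining minimum-duration days.

    `price_per_gb_month` is an input, not a quoted AWS price.
    """
    remaining = early_deletion_days(storage_class, days_stored)
    return size_gb * price_per_gb_month * remaining / days_per_month
```

For example, deleting a Flexible Retrieval archive after 30 days leaves 60 billable days, while deleting it after 120 days leaves none.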

Summary

For businesses facing the challenge of storing large volumes of data for long-term purposes, Amazon S3 Glacier presents a viable and cost-effective solution. By grouping files, leveraging lifecycle policies for automated data management, and choosing the appropriate storage tier, organizations can achieve significant cost savings while ensuring data is secure and accessible when needed.

Regularly reviewing storage strategies and staying informed about AWS pricing and features will further optimize long-term data backup solutions. This comprehensive approach not only addresses the specific needs of storing 30 TB of data but also provides a scalable strategy for organizations managing extensive data archives.